Linux is not perfect. When installing packages from repositories, chances are some error will show up, most commonly a conflict between packages that prevents them from being installed or updated. On rare occasions, yum may also fail to complete a transaction. When that happens, you will see an error message similar to the one below:

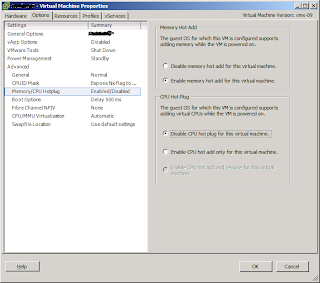

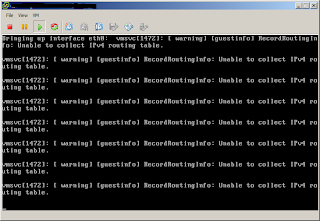

```
There are unfinished transactions remaining. You might consider running yum-complete-transaction first to finish them.
```

The screenshots above show the same message as it appears in a terminal.

Sometimes a slightly longer version of the message appears:

```
There are unfinished transactions remaining. You might consider running yum-complete-transaction first to finish them. The program yum-complete-transaction is found in the yum-utils package.
```

The longer message is more useful because it tells you that the "yum-complete-transaction" command is provided by the yum-utils rpm package. Install that package if it is not already present.
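If you are not sure whether the tool is already on your system, a quick check before touching anything might look like the sketch below. The function name check_yum_complete_transaction is hypothetical. The function only looks the command up on your PATH and prints a suggestion. It does not install anything itself.

```shell
#!/bin/sh
# Hypothetical helper: report whether yum-complete-transaction is on the PATH.
# Read-only: it prints a suggestion but never installs anything.

check_yum_complete_transaction() {
    if command -v yum-complete-transaction >/dev/null 2>&1; then
        echo "yum-complete-transaction is installed"
    else
        echo "yum-complete-transaction not found; install it as root with: yum install yum-utils"
    fi
}

check_yum_complete_transaction
```

Running `rpm -q yum-utils` gives the same answer from the package side, because it reports whether the yum-utils rpm itself is installed.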

WARNING: Although yum itself suggests running "yum-complete-transaction", avoid running it if you possibly can. Running "yum-complete-transaction" without knowing what the interrupted transaction was may wipe out your whole system. The result could be disastrous.
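If you want to know what the interrupted transaction was before deciding anything, you can look at the journal files that yum-complete-transaction reads. The sketch below is read-only and makes several assumptions. First, it assumes the journals sit in /var/lib/yum as transaction-all.<timestamp> (everything that was planned) and transaction-done.<timestamp> (everything that finished). Second, it does not interpret the journal line format. It only prints the planned lines that have no exact match in the matching "done" file. The function name show_pending_yum_transactions is hypothetical. Verify the paths and the output on your own system before relying on them.

```shell
#!/bin/sh
# Hypothetical read-only helper: list leftover yum transaction journals.
# ASSUMPTION: journals are named transaction-all.<timestamp> and
# transaction-done.<timestamp> and live in /var/lib/yum by default.

show_pending_yum_transactions() {
    dir="${1:-/var/lib/yum}"              # journal directory (override for testing)
    found=0
    for all in "$dir"/transaction-all.*; do
        [ -e "$all" ] || continue         # glob matched nothing
        found=1
        ts="${all##*/transaction-all.}"   # timestamp suffix of the journal
        done_file="$dir/transaction-done.$ts"
        [ -e "$done_file" ] || done_file=/dev/null   # nothing completed yet
        echo "== unfinished transaction $ts =="
        # Planned lines that have no exact match in the "done" journal.
        grep -vxF -f "$done_file" "$all"
    done
    [ "$found" -eq 1 ] || echo "no unfinished transaction journals in $dir"
}

show_pending_yum_transactions "$@"
```

Run it as root with no argument to inspect /var/lib/yum, or pass another directory as the first argument. Because it only reads files, it is safe to run before deciding what to do next.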

I know it is very inconvenient to see this error message in every yum transaction. If that is what's bothering you, take the safer route and run yum-complete-transaction with the "--cleanup-only" option. The exact command to execute, as root, is:

```
# yum-complete-transaction --cleanup-only
```

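With "--cleanup-only", yum-complete-transaction does not finish the interrupted transaction. It only removes the leftover transaction journal files, so no packages are installed, updated, or removed. Again, do not run a full yum-complete-transaction if you don't have a backup of your system, because you may end up wiping the contents of your drive. I have seen this happen often enough that I have learned my lesson, from both my own mistakes and the mistakes of others.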