In the previous post, PySpark and Jupyter Notebooks, we got PySpark (Spark Python Big Data API) and Jupyter Notebook to work in tandem. We can now leverage Spark's massively parallel processing (MPP) and use every core of the server (or cluster). Execution can scale across as many cluster nodes and as many cores as you want. It is worth noting that Spark doesn't necessarily require Hadoop to run.

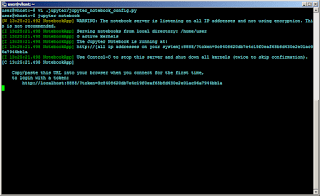

If you recall, running [jupyter notebook] with an open IP address showed: WARNING: The notebook server is listening on all IP addresses and not using encryption. This is not recommended. Running the server this way on an internal network may be tolerable, but it does not conform to best practice.

This "might" be acceptable for an internal development setup, but the risk is much greater on a public cloud setup, and "might" is not good enough for production notebook servers. So it is better to address the issue.

For added security, let's password-protect the Jupyter Notebook so that only users who know the password can use the PySpark setup. On the terminal, execute [jupyter notebook password]. Enter the password, and repeat it when asked.
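
To confirm that the password was actually recorded, a small check like the one below can help. It is only a sketch: it assumes the classic Notebook stores the hashed password under the NotebookApp.password key of ~/.jupyter/jupyter_notebook_config.json, and the helper name password_is_set is made up for this example. Newer Jupyter Server releases may use a different file or key.

```python
import json
from pathlib import Path


def password_is_set(config_path):
    """Return True if the JSON config holds a non-empty NotebookApp.password.

    Assumption: the hash written by [jupyter notebook password] lives under
    the "NotebookApp" -> "password" key of the given JSON file.
    """
    path = Path(config_path)
    if not path.is_file():
        # No JSON config yet, so no password has been recorded there.
        return False
    config = json.loads(path.read_text())
    return bool(config.get("NotebookApp", {}).get("password"))


# Example call (path is the assumed default location):
# password_is_set(Path.home() / ".jupyter" / "jupyter_notebook_config.json")
```

The function only reports whether a hash is present. It never reads or prints the password itself.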

The password keeps unauthorized users from casually accessing the Jupyter Notebook. However, packets are still transmitted in plain text and could be sniffed. The next half of the procedure solves this problem and completes the resolution of the WARNING message.

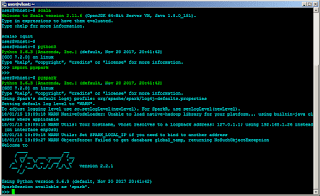

Generate the certificate needed by the Jupyter Notebook server. Execute [openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout jupyter.pem -out jupyter.pem] to create a self-signed certificate. The command asks for a few other details, which are up to you to fill out. I keep the certificate inside the .jupyter directory, and the rest of this procedure assumes that location.
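
Because the command writes both the private key and the certificate to the same jupyter.pem file, it can be worth checking that both blocks ended up in it. The following is a minimal sketch that only inspects the PEM header lines. The function names are hypothetical, and it does not validate the key or the certificate cryptographically.

```python
import re


def pem_block_types(pem_text):
    """Return the labels of all PEM blocks, in order of appearance."""
    return re.findall(r"-----BEGIN ([A-Z0-9 ]+)-----", pem_text)


def has_key_and_certificate(pem_text):
    """Check that the text holds a private key block and a certificate block."""
    labels = pem_block_types(pem_text)
    # Depending on the OpenSSL version, the key label may be
    # "PRIVATE KEY" or "RSA PRIVATE KEY"; accept either.
    has_key = any(label.endswith("PRIVATE KEY") for label in labels)
    has_cert = "CERTIFICATE" in labels
    return has_key and has_cert


# Example call (path follows the location used in this post):
# with open("/home/user/.jupyter/jupyter.pem") as f:
#     print(has_key_and_certificate(f.read()))
```

Since the file contains the private key, it is sensible to make it readable only by the user who runs the notebook server.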

Recall that we added several lines to the generated Jupyter Notebook configuration (/home/user/.jupyter/jupyter_notebook_config.py). In that same file, add this line:

c.NotebookApp.certfile = '/home/user/.jupyter/jupyter.pem'

The modified configuration wraps traffic between the Jupyter Notebook server and the browser in SSL encryption, which resolves the WARNING. All that remains is to restart the notebook application.

Notice that the token is no longer required when connecting to the Jupyter Notebook server, because the server is now protected by a password and the connection is encrypted with SSL. This should set you up with a working PySpark installation for multi-threaded data crunching. Next up, let's solve this issue using SSH tunnels instead.