For this I need the Jupyter Notebook development environment. For those not familiar with Jupyter or what it does, the developers have written fantastic documentation at this link. The miniconda3 installed earlier is perfect for this, since Jupyter integrates right into it. Simply run [conda install jupyter --yes] to install Jupyter Notebook. This pulls in a lot of Python libraries and can take a while, depending on your internet connection.

Again, the Jupyter documentation is fantastic. If you follow along with the quickstart guides, you will be up and running in no time. For this guide, let's jump ahead to the configuration and generate a default configuration file. Execute [jupyter notebook --generate-config] in the terminal.

As seen in the screen capture above, the default configuration file is written to the user's home directory, inside a newly created hidden directory ".jupyter". Inside, there is only one file: "jupyter_notebook_config.py". Modify this config file so that the Jupyter notebook can be accessed from any remote computer on the network.

Insert the following lines of code (start at line #2):

c = get_config()

c.NotebookApp.ip = '*'

c.NotebookApp.port = 8888

c.NotebookApp.open_browser = False

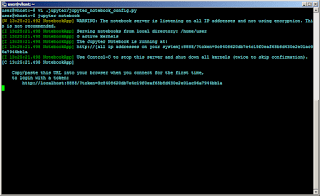
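If you would rather script the edit than make it by hand, the insertion can be sketched in Python. This is only a minimal sketch: the helper name `insert_settings` and the constant `REMOTE_ACCESS_LINES` are made up for illustration, and it assumes the config file already exists at the path you pass in.

```python
from pathlib import Path

# The four settings from this guide, in the same order.
REMOTE_ACCESS_LINES = [
    "c = get_config()",
    "c.NotebookApp.ip = '*'",
    "c.NotebookApp.port = 8888",
    "c.NotebookApp.open_browser = False",
]


def insert_settings(config_path, new_lines=REMOTE_ACCESS_LINES, at_line=2):
    """Insert new_lines into the file, starting at 1-based line at_line.

    Hypothetical helper, not part of Jupyter. Returns True if the file
    was changed, False if every line was already present.
    """
    path = Path(config_path)
    existing = path.read_text().splitlines()

    # Do nothing if the settings were already inserted earlier.
    if all(line in existing for line in new_lines):
        return False

    # Convert the 1-based line number to a list index, capped at the file end.
    index = min(at_line - 1, len(existing))
    updated = existing[:index] + list(new_lines) + existing[index:]
    path.write_text("\n".join(updated) + "\n")
    return True
```

For the default location described above, the call would look like `insert_settings(Path.home() / ".jupyter" / "jupyter_notebook_config.py")`. Make a backup copy of the file first if you have already customized it.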

After inserting the lines above, test the configuration by running [jupyter notebook] on a terminal.
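To confirm from another machine that the notebook server is actually reachable, a quick TCP check can help. The sketch below uses only Python's standard `socket` module; the function name `is_port_open` is made up for illustration, and the host you pass in is whatever address your server has on your network (an assumption you need to fill in yourself). Port 8888 is the one set in the config above.

```python
import socket


def is_port_open(host, port=8888, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds.

    Hypothetical helper: it only checks that something is listening,
    not that the listener is really a Jupyter notebook server.
    """
    try:
        # create_connection raises OSError on refusal, timeout, or DNS failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `is_port_open("localhost")` on the server itself is a reasonable first test. If that returns True but the check fails from a remote machine, a firewall or the `ip` setting is a likely place to look.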

Once the terminal shows the message "Copy/paste this URL into your browser when you connect..", the Jupyter configuration is good to go. There is one problem, however: when you close the terminal, the Jupyter session dies. This is where "screen" comes in. Execute [jupyter notebook] in a screen session and detach the session, so the server keeps running after the terminal is closed.
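Starting the detached session can also be scripted. The sketch below builds the screen command line and runs it from Python, assuming GNU screen is installed and on the PATH. The session name "jupyter" and both function names are arbitrary choices for this example, not anything screen or Jupyter requires.

```python
import shutil
import subprocess


def screen_command(session_name="jupyter", command=("jupyter", "notebook")):
    """Build the argv that starts `command` in a detached screen session.

    -d -m starts the session already detached; -S gives it a name.
    """
    return ["screen", "-dmS", session_name, *command]


def start_detached(session_name="jupyter"):
    """Launch the notebook server inside a detached screen session."""
    if shutil.which("screen") is None:
        raise RuntimeError("screen is not installed or not on PATH")
    subprocess.run(screen_command(session_name), check=True)
```

With the session named this way, [screen -r jupyter] reattaches to it later, and [screen -ls] lists the sessions that are still running.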

Just to give you an idea of what PySpark can do: I tried sorting 8M rows of a time-series PySpark dataframe, and it took roughly 4 s to execute. Try this with a regular pandas dataframe and it can take minutes to complete. It doesn't stop there; there is a lot more it can do.


A working PySpark setup on a powerful server that is accessible via the web is a valuable tool in the arsenal of a data scientist. Next time, let's heed the warning about the Jupyter notebook being accessible to everyone. How? Soon..